What is Cache Memory?

Cache Memory Definition: Cache memory is a small, fast memory made of SRAM (Static RAM). It is built into the CPU (processor) or placed between the CPU and the main memory of a computer system. It keeps copies of data and instructions that the CPU uses often, so the CPU can reach them more efficiently than by going to primary memory each time. Cache memory is temporary (volatile) storage, so its contents are lost when the power is turned off. The CPU can read it faster than the computer’s primary memory, and it works well because programs show locality of reference, which is described later in this article.

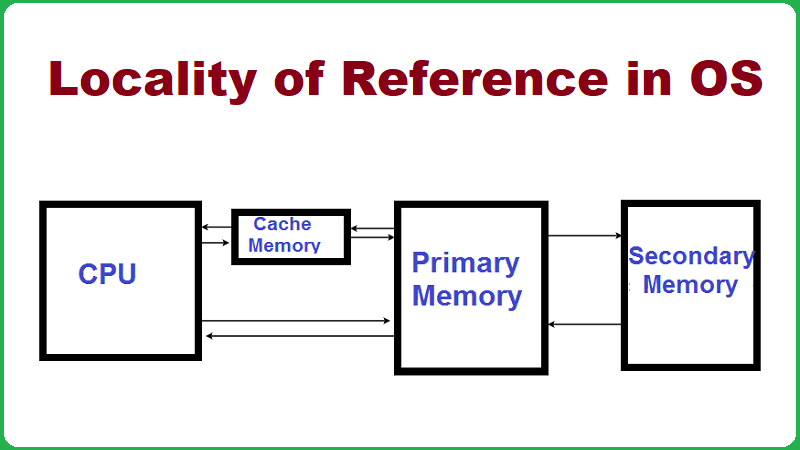

Cache Memory Diagram

Cache memory is much faster than Random Access Memory (RAM), commonly quoted as about 10 to 100 times faster, and for the same reason it is more expensive than the primary memory of a computer system. The main objective of cache memory is to reduce the average time needed to retrieve data from primary memory. It does this by keeping copies of regularly used data from primary memory close to the CPU.
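The effect on the average time can be worked out with the standard average memory access time (AMAT) formula, where h is the cache hit rate, t_hit is the cache access time, and t_miss is the extra time to fetch from main memory on a miss. The figures below (1 ns cache, 100 ns main memory, 95% hit rate) are assumed round numbers for illustration, not measurements of any real system.

```latex
\text{AMAT} = t_{\text{hit}} + (1 - h)\, t_{\text{miss}}
            = 1\,\text{ns} + (1 - 0.95) \times 100\,\text{ns}
            = 6\,\text{ns}
```

Under these assumptions an average access takes about 6 ns, instead of the 100 ns every access would cost if there were no cache.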

Importance of Cache Memory

CPU cache memory plays a very important role in the memory unit of a computer system because it is one of the main ways to improve the computer’s performance. It has limited space but is much faster than main memory.

It is also more expensive than primary memory. Cache memory sits between the processor registers and RAM (Random Access Memory).

Cache memory can be located in two places: on the motherboard or on the processor. Cache located on the motherboard is called “External Cache Memory” or “L2” cache, and it is very fast.

External cache memory is available in different sizes, such as 256 KB, 512 KB, 1 MB, and 2 MB. Cache located on the processor is called “Internal Cache Memory”, and it is even faster than external cache memory. This kind of internal cache is found on the Pentium III and later processors.

Types of Cache Memory

Two types of cache memory are used in a CPU:

Primary Cache Memory – This cache memory is embedded directly in the processor (CPU). It is small, but its access time is close to that of the processor registers.

Secondary Cache Memory – This memory is located between the primary cache and main memory. Its access time is higher than that of primary cache memory.

Levels of Cache Memory

Level 1 (L1) Cache

L1 is also called “Primary Cache Memory”. It is built with SRAM, and it is smaller than the other caches but larger than the CPU’s registers. Its storage capacity is typically between 2KB and 64KB, depending on the processor.

It is located inside the microprocessor, right next to the registers. The CPU always searches the L1 cache first for instructions and data before checking the other levels. Registers such as the accumulator, program counter, and address register are not part of L1; they sit above it in the memory hierarchy and are faster still.

Level 2 (L2) Cache

L2 is also called “Secondary Cache Memory”, and it sits between the CPU (with its L1 cache) and Dynamic RAM. It is larger but slower than the L1 cache, with a storage capacity between 64KB and 2MB. L2 is also made of SRAM. The two caches (L1 and L2) work together: if some data is missing from L1, it is retrieved from the L2 cache.

Level 3 (L3) Cache

L3 is not main memory. It is usually shared by all the cores of a processor and sits between the L2 cache and main memory. L3 is larger than L1 and L2 but also slower, with a storage capacity typically between 1MB and 8MB. Like the other caches, L3 loses all its data when the power is turned off. It helps to reduce the delay between a request for data and its delivery from main memory.
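The search order through the levels (L1 first, then L2, then L3, then main memory) can be sketched as below. This is a hypothetical model, not how any real processor is programmed: each level is just a Python set of cached addresses, capacity limits and eviction are ignored, and data found at a slower level is copied into the faster levels that missed.

```python
from typing import List, Set, Tuple

# Hypothetical model: each cache level is a (name, set of cached addresses) pair,
# listed from fastest (L1) to slowest (L3). Capacity limits and eviction are ignored.
Level = Tuple[str, Set[int]]


def lookup(address: int, levels: List[Level]) -> str:
    """Search L1, then L2, then L3; return where the data was found."""
    for i, (name, contents) in enumerate(levels):
        if address in contents:
            # Hit at this level: copy the data into the faster levels that missed.
            for _, upper in levels[:i]:
                upper.add(address)
            return name
    # Missed in every cache level: read from main memory and fill all levels.
    for _, contents in levels:
        contents.add(address)
    return "main memory"


levels = [("L1", set()), ("L2", {0x40}), ("L3", {0x80})]
print(lookup(0x40, levels))  # L2 (and the data is now also in L1)
print(lookup(0x40, levels))  # L1
print(lookup(0x80, levels))  # L3
print(lookup(0xC0, levels))  # main memory
```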

Cache Memory Size

- L1 Cache – 2KB to 64KB

- L2 Cache – 64KB to 2MB

- L3 Cache – 1MB to 8MB

Function of Cache Memory

Cache memory performs its function through the following sequence of steps each time the CPU requests data.

Use of Cache Memory

- The CPU sends requests for the contents of various memory locations.

- The cache is searched for that data by comparing the tag of the requested address with the tag stored in each cache slot.

- If the data is present (a cache hit), it is moved from cache memory to the CPU.

- If the data is not present (a cache miss), the needed block of data is fetched from primary memory into cache memory.

- The data is then moved from cache memory to the CPU.
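The tag comparison across every cache slot can be sketched with a toy fully associative cache. The block size, the number of slots, and the FIFO replacement rule are all assumptions chosen for illustration; the class and method names are hypothetical.

```python
from typing import List, Optional

BLOCK_SIZE = 16  # bytes per cache block (assumed value)


class FullyAssociativeCache:
    """Toy cache in which any block may sit in any slot (hypothetical model).

    Because a block can be anywhere, a lookup compares the requested tag
    with the tag stored in every slot. Replacement is simple FIFO.
    """

    def __init__(self, num_slots: int) -> None:
        self.slots: List[Optional[int]] = [None] * num_slots  # tag held by each slot
        self.next_victim = 0  # index of the slot to overwrite on the next miss
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        tag = address // BLOCK_SIZE  # here the tag is simply the block number
        for stored_tag in self.slots:  # compare against every slot's tag
            if stored_tag == tag:
                self.hits += 1
                return True  # cache hit: serve the data from the cache
        # Cache miss: bring the block in from primary memory, replacing the oldest one.
        self.slots[self.next_victim] = tag
        self.next_victim = (self.next_victim + 1) % len(self.slots)
        self.misses += 1
        return False


cache = FullyAssociativeCache(num_slots=2)
results = [cache.access(a) for a in [0, 4, 32, 0, 64, 0]]
print(results)  # [False, True, False, True, False, False]
```

Real caches usually restrict where a block may go (direct-mapped or set-associative designs) so that only a few tags need to be compared; the fully associative version is used here because it matches the "every cache slot" description above.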

Advantages of Cache Memory

- Faster than primary memory.

- Lower access time than primary memory (RAM).

- Provides temporary storage for instructions being executed over a short span of time.

- Keeps frequently used data and instructions ready for the CPU.

- Improves the overall performance of the computer system.

Disadvantages of Cache Memory

- Smaller storage capacity than main memory

- More expensive

- Not permanent storage: contents are lost when the power is turned off

Locality of Reference in OS

Definition: Locality of reference is the tendency of computer programs to access memory locations that are close to recently accessed addresses, or to access the same locations again, within a short period of time.


The principle of locality of reference is based on the way programs use loops and subroutine calls.

When a program executes a loop, the CPU fetches the same set of instructions that make up the loop over and over again.

When a subroutine is called, the whole group of instructions in that subroutine is fetched from the memory unit, and every later call fetches the same instructions again.

References to data items are also localized, which means the same data item is referenced again and again.
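The loop behaviour described above can be made concrete by listing the addresses a simple summing loop touches. The memory layout below (a three-instruction loop body at 0x400 and an array of 4-byte integers at 0x1000) is entirely hypothetical and chosen only to show the pattern: the instruction addresses repeat (the temporal locality described below), and the data addresses sit right next to each other (the spatial locality described below).

```python
# Hypothetical memory layout, chosen only for illustration:
LOOP_START = 0x400   # address of the first instruction in the loop body
INSTR_SIZE = 4       # bytes per instruction
ARRAY_BASE = 0x1000  # address of a[0]
ELEM_SIZE = 4        # bytes per array element


def loop_trace(n: int):
    """Return the instruction and data addresses touched by `for i in range(n): total += a[i]`."""
    instr_addrs, data_addrs = [], []
    for i in range(n):
        # The same three loop-body instructions (load, add, branch) are fetched every iteration.
        for k in range(3):
            instr_addrs.append(LOOP_START + k * INSTR_SIZE)
        # Each iteration reads the next array element, right after the previous one.
        data_addrs.append(ARRAY_BASE + i * ELEM_SIZE)
    return instr_addrs, data_addrs


instr, data = loop_trace(4)
print(len(instr), len(set(instr)))  # 12 3
print([hex(a) for a in data])       # ['0x1000', '0x1004', '0x1008', '0x100c']
```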

As the cache memory diagram shows, when the CPU wants to fetch data, it checks the cache memory first because the cache is closest to it and offers higher access speed. If the required data is found in the cache, it is retrieved from there, and this condition is known as a “Cache Hit”.

If the required instructions or data are not found in the cache, the case is called a “Cache Miss”. The CPU then searches primary memory for the required data, and once the data is found, one of two methods is followed:

- In the first method, the CPU reads the required data or instructions and uses them without storing them in the cache. If the same data is needed again later, the CPU must access primary memory again, and primary memory is the slower unit.

- In the second method, the required data or instructions are copied into the cache memory, so that if the same data is needed again later, it can be accessed much faster.
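The difference between the two methods shows up when the same addresses are requested more than once. The sketch below counts how many requests have to go to primary memory under each method; the function name is hypothetical, and the cache is assumed to be large enough that nothing is ever evicted.

```python
from typing import Iterable


def main_memory_reads(requests: Iterable[int], keep_in_cache: bool) -> int:
    """Count how many requests have to go to slow primary memory.

    keep_in_cache=False models the first method (use the data, do not cache it);
    keep_in_cache=True models the second method (copy it into the cache on a miss).
    The cache is assumed to be big enough that nothing is ever evicted.
    """
    cache = set()
    reads = 0
    for addr in requests:
        if addr in cache:
            continue  # cache hit: no main-memory access needed
        reads += 1  # cache miss: read from primary memory
        if keep_in_cache:
            cache.add(addr)
    return reads


requests = [10, 20, 10, 10, 20]
print(main_memory_reads(requests, keep_in_cache=False))  # 5
print(main_memory_reads(requests, keep_in_cache=True))   # 2
```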

Types of Locality of Reference in OS

Locality of reference is commonly divided into the following five types:

Temporal Locality: Temporal locality means that a data item or instruction that was accessed recently is likely to be accessed again soon. Such items are therefore kept in the cache memory, so that the next access does not have to go back to slower primary memory.
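Temporal locality only pays off if the reuse happens before the item is pushed out of a small cache. The sketch below counts hits for a trace of block numbers, assuming a least-recently-used (LRU) replacement policy; the function name and the traces are illustrative, not taken from any real system.

```python
from collections import OrderedDict
from typing import List


def lru_hits(trace: List[int], capacity: int) -> int:
    """Count cache hits for a block trace, using LRU replacement (an assumed policy)."""
    cache: OrderedDict = OrderedDict()  # keys are block numbers, least recently used first
    hits = 0
    for block in trace:
        if block in cache:
            hits += 1
            cache.move_to_end(block)  # mark as most recently used
        else:
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict the least recently used block
            cache[block] = None
    return hits


print(lru_hits([1, 2, 1, 2, 1, 2], capacity=2))  # 4: blocks reused soon stay cached
print(lru_hits([1, 2, 3, 1, 2, 3], capacity=2))  # 0: reuse comes too late for 2 slots
```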


Spatial Locality: Spatial locality is different from temporal locality. It means that data or instructions located near the currently accessed memory location are likely to be needed in the near future. The cache therefore fetches nearby locations along with the requested one, so that they can be delivered quickly when they are demanded.
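Spatial locality is why a cache loads a whole block on a miss rather than a single byte. The sketch below assumes 16-byte blocks and a cache that never evicts, then compares reading consecutive 4-byte elements with reading one element per block; the names and sizes are hypothetical.

```python
BLOCK_SIZE = 16  # bytes fetched per miss (assumed value)


def hits_with_blocks(addresses) -> int:
    """Count hits when every miss loads the whole surrounding block into the cache."""
    loaded = set()  # block numbers already in the cache (no eviction, for simplicity)
    hits = 0
    for addr in addresses:
        block = addr // BLOCK_SIZE
        if block in loaded:
            hits += 1  # an earlier access to a neighbour already brought this address in
        else:
            loaded.add(block)  # miss: fetch the block, including nearby addresses
    return hits


sequential = [4 * i for i in range(64)]  # consecutive 4-byte elements
strided = [16 * i for i in range(64)]    # one element per 16-byte block
print(hits_with_blocks(sequential))  # 48 of 64 accesses hit
print(hits_with_blocks(strided))     # 0: the nearby bytes that were fetched are never used
```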

Memory Locality: Memory locality (also called data locality) is spatial locality that refers explicitly to memory accesses.

Branch Locality: Branch locality occurs when there are only a few possible alternatives for the next part of the access path in the spatio-temporal coordinate space. This is the case when an instruction loop has a simple structure, or when the possible outcomes of a small system of conditional branch instructions are limited to a small set of possibilities. Branch locality is generally not spatial locality, because the few possibilities may be located far away from each other.

Equidistant Locality: Equidistant locality lies halfway between spatial locality and branch locality. Consider a loop that accesses locations in an equidistant pattern, that is, the path in the spatio-temporal coordinate space is a dotted line. In this case, a simple linear function can predict which location will be accessed in the near future.
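The "simple linear function" mentioned above can be sketched as next address = last address + stride. This is roughly the idea behind hardware stride prefetchers, but the function below is a hypothetical illustration, not the logic of any specific processor: it only predicts when the last two strides agree.

```python
from typing import List, Optional


def predict_next(history: List[int]) -> Optional[int]:
    """Predict the next address with a linear function: last address + stride.

    Assumes an equidistant (constant-stride) pattern. Returns None when there
    is too little history or the last two strides disagree.
    """
    if len(history) < 2:
        return None
    stride = history[-1] - history[-2]
    if len(history) >= 3 and history[-2] - history[-3] != stride:
        return None  # not an equidistant pattern
    return history[-1] + stride


print(predict_next([100, 108, 116]))  # 124
print(predict_next([100, 108, 130]))  # None
```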